How good is the GPT-3 neural network? Our team put it to the test.

Is it possible to write and read code with artificial intelligence? How can AI-driven text generation support our daily work? These are some of the questions we examined at our recent hackathon, where we tested what OpenAI's GPT-3 can do.

What is GPT-3?

GPT-3 is a neural network from OpenAI that has been trained on large amounts of text from the Internet. GPT-3 can write and predict text. It does this by combining the input you give it with what it learned from the data it was trained on in advance.
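
GPT-3 itself is a very large transformer model that works on sub-word tokens, and nothing below reflects its internals. As a drastically simplified illustration of the "predict what comes next" idea, this sketch counts which word followed which in a tiny training text (a so-called bigram model). It then extends a prompt one word at a time, always picking the most frequent follower. The training text and function names are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus: str) -> dict[str, Counter]:
    """Count, for every word, which words followed it in the training text."""
    words = corpus.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def complete(prompt: str, follows: dict[str, Counter], max_words: int = 5) -> str:
    """Greedily append the most frequent next word, one word at a time."""
    words = prompt.lower().split()
    for _ in range(max_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # this word was never followed by anything in training
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


corpus = "may the force be with you . the force is strong . may the force be with you always"
model = train_bigrams(corpus)
print(complete("may the", model, max_words=4))  # may the force be with you
```

The "knowledge" here is just the counts gathered during training, combined with the prompt at generation time. GPT-3 does the same kind of thing at a vastly larger scale and with far more context than a single previous word.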

The example below shows how it works and how broad its uses are. The grey box is the input given to GPT-3. The green box shows what GPT-3 considers the most natural continuation of the input text, in this case 💥🌟 as a description of Star Wars.

We find it particularly interesting that GPT-3 can translate plain text into code and code into plain text. This post describes the experiments we ran during the hackathon and what came out of them. Our goal was to see how GPT-3 could help us in our daily work with code.

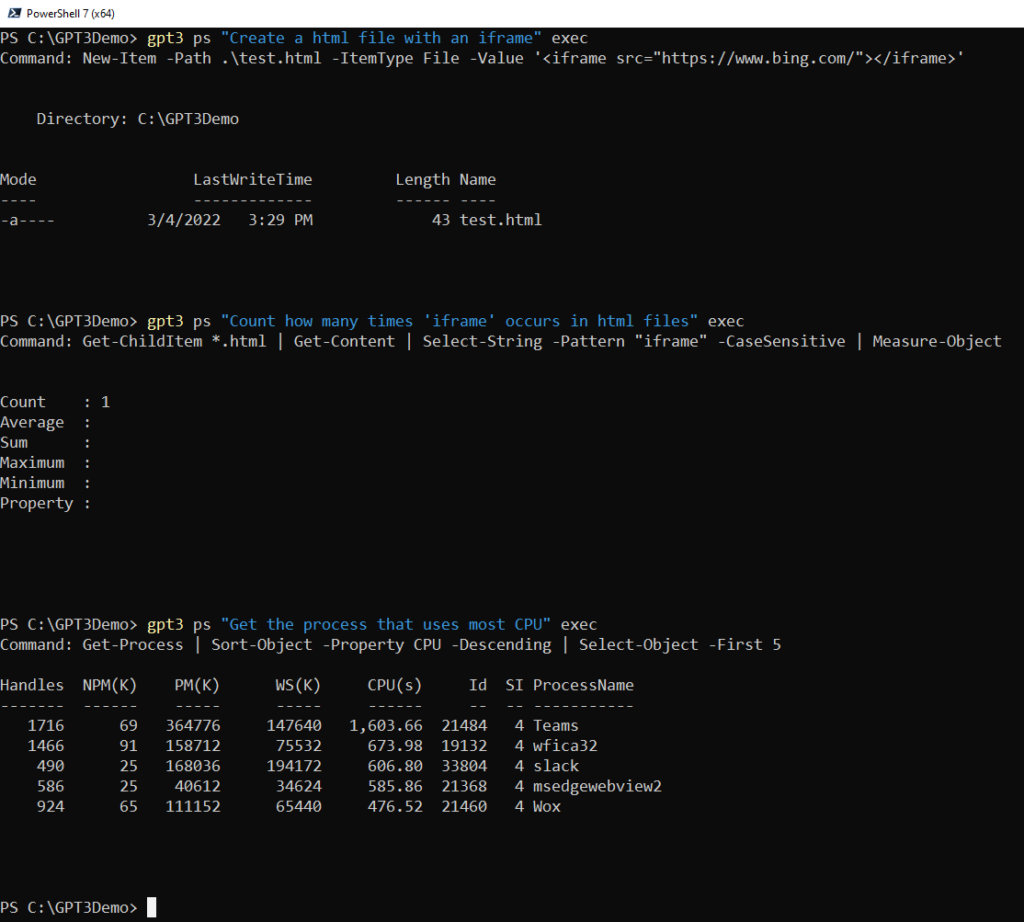

Experiment #1 - Text to PowerShell

We started by testing whether GPT-3 could suggest PowerShell commands based on a plain-text description of what we wanted to do. The results are shown below, and as they show, it actually comes quite close.

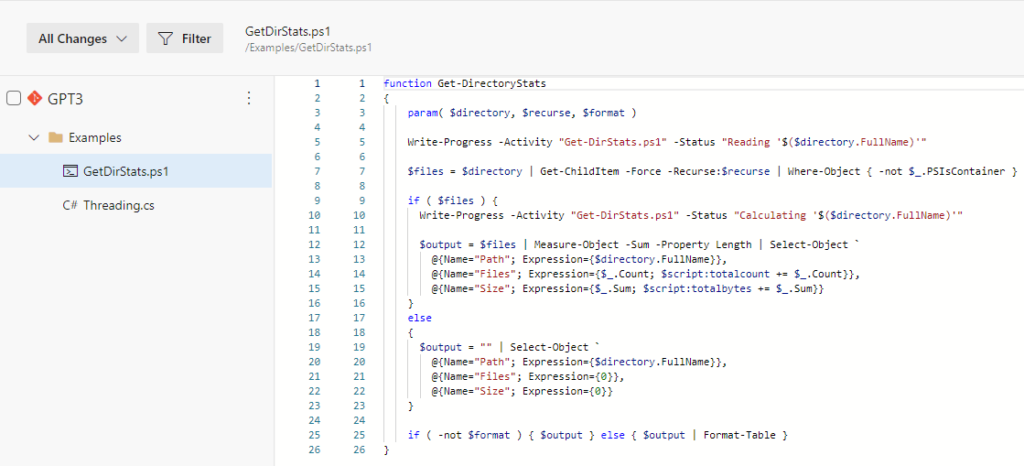

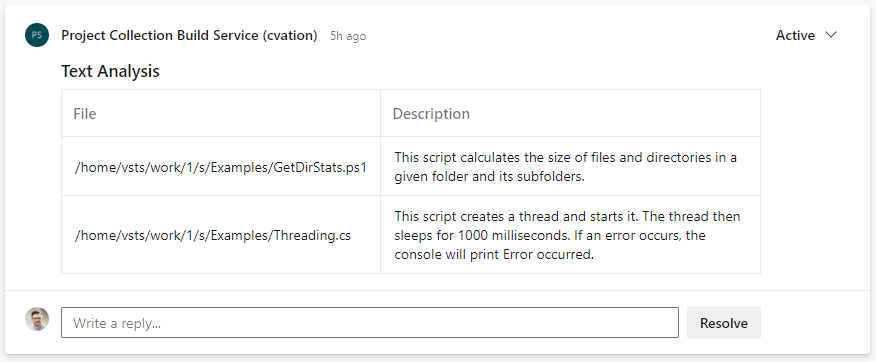
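
The prompt we used is not reproduced here. One common way to ask GPT-3 for commands is a "few-shot" prompt, which shows the model a couple of task/command pairs and leaves the last command blank for it to fill in. The sketch below only builds such a prompt and pulls the first command out of a completion; it does not call the API. The example tasks, the `# Task:` format and the helper names are hypothetical.

```python
# Hypothetical few-shot examples: (plain-text task, PowerShell command).
EXAMPLES = [
    ("list all files in the current folder", "Get-ChildItem -File"),
    ("show the five processes using the most CPU",
     "Get-Process | Sort-Object CPU -Descending | Select-Object -First 5"),
]


def build_prompt(request: str, examples=EXAMPLES) -> str:
    """Show the model solved examples, then leave the new task's command blank."""
    blocks = [f"# Task: {task}\n{command}" for task, command in examples]
    blocks.append(f"# Task: {request}\n")
    return "\n\n".join(blocks)


def first_command(completion: str) -> str:
    """Return the first non-empty, non-comment line of the model's completion."""
    for line in completion.splitlines():
        line = line.strip()
        if line and not line.startswith("#"):
            return line
    return ""


print(build_prompt("delete all .tmp files in this folder"))
```

Taking only the first command line matters because the model often keeps going and invents further "# Task:" entries of its own.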

Experiment #2 - Explain the code in a pull request

GPT-3 can also translate code into plain text. We built this into a pipeline so that you, as a reviewer, get help understanding what the code does.

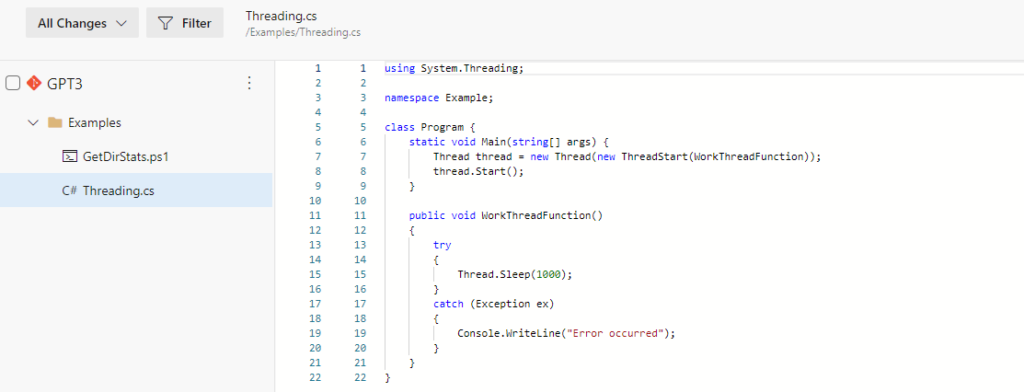

We tried it on a PowerShell script and a very simple C# program, as can be seen below.

When code is committed, it triggers a build validation pipeline. A PowerShell step determines what has changed in the code and sends that code to the OpenAI API. The response from the OpenAI API is then saved as a comment on the pull request, as seen below.
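
Our pipeline step was written in PowerShell. For readers who want to try the same idea, here is a rough Python sketch of the two core steps: finding the changed files and wrapping each one in a request to the OpenAI API. Several details are assumptions rather than what we ran: the `origin/main` base branch, the `.ps1`/`.cs` filter, the legacy completions endpoint and model name, the prompt wording, and the `max_tokens` value. The sketch builds the HTTP request but does not send it.

```python
import json
import subprocess
import urllib.request

# Assumptions: legacy OpenAI completions endpoint and an illustrative model name.
API_URL = "https://api.openai.com/v1/completions"
MODEL = "text-davinci-003"
REVIEWABLE = (".ps1", ".cs")  # hypothetical: only explain PowerShell and C# files


def changed_files(base: str = "origin/main") -> list[str]:
    """List reviewable files changed between the target branch and HEAD (needs a git checkout)."""
    out = subprocess.run(
        ["git", "diff", "--name-only", f"{base}...HEAD"],
        capture_output=True, text=True, check=True,
    ).stdout
    return [path for path in out.splitlines() if path.endswith(REVIEWABLE)]


def build_request(source: str, api_key: str) -> urllib.request.Request:
    """Wrap one file's source code in an 'explain this code' completion request."""
    payload = {
        "model": MODEL,
        "prompt": f"{source}\n\n# Explain what the code above does:\n",
        "max_tokens": 150,
        "temperature": 0,  # ask for the most likely wording rather than a varied one
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {api_key}"},
        method="POST",
    )
```

Sending a request would be `urllib.request.urlopen(req)`, and in the legacy completions response the explanation sits in `choices[0]["text"]`. Posting that text as a pull-request comment depends on the Git host's REST API and is left out of the sketch.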

The results:

- This script calculates the size of files and directories in a folder and its subfolders.
- This script creates a thread and starts it. The thread then sleeps for 1000 milliseconds. If an error occurs, the console will print "Error occurred".

That is indeed what the code does. However, we also found that GPT-3 can produce different results for the same code. For the C# program, for example, GPT-3 sometimes writes that the program prints “Hello, world!” to the console, which is obviously wrong.
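
One likely reason for this variation is that text generators usually sample the next token from a probability distribution instead of always taking the single most likely one. The OpenAI API controls how adventurous that sampling is through a `temperature` parameter. The sketch below uses invented scores for two candidate descriptions to show the effect. At low temperature the top candidate almost always wins; at higher temperature a wrong description such as "Hello, world!" gets a real chance of being picked.

```python
import math
import random

# Invented scores for two candidate continuations (higher = more likely).
SCORES = {"creates a thread": 2.0, "prints Hello, world!": 1.0}


def softmax_with_temperature(scores: dict[str, float], temperature: float) -> dict[str, float]:
    """Turn raw scores into probabilities; lower temperature sharpens the distribution."""
    scaled = {k: v / temperature for k, v in scores.items()}
    top = max(scaled.values())
    exps = {k: math.exp(v - top) for k, v in scaled.items()}  # subtract max for numerical stability
    total = sum(exps.values())
    return {k: e / total for k, e in exps.items()}


def sample(scores: dict[str, float], temperature: float, rng: random.Random) -> str:
    """Draw one continuation at random, weighted by its probability."""
    probs = softmax_with_temperature(scores, temperature)
    return rng.choices(list(probs), weights=list(probs.values()), k=1)[0]


for t in (0.1, 1.0, 2.0):
    p = softmax_with_temperature(SCORES, t)["prints Hello, world!"]
    print(f"temperature {t}: P(wrong description) = {p:.3f}")
```

Lowering the temperature makes the output more repeatable, but it does not make the model's most likely answer correct.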

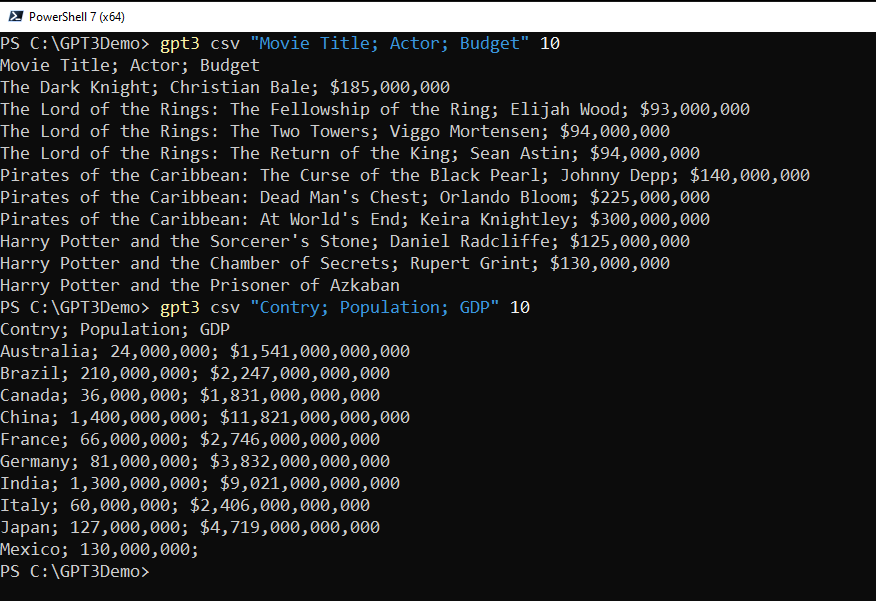

Experiment #3 - Autogenerating test data

Our last experiment was generating test data. A classic problem when a system is in the test phase is that you need realistic test data. Here GPT-3 is able to interpret what you ask for and draw on information it picked up during training, as can be seen in the example below.
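
Asking for CSV output makes the generated data easy to load, but the model's text still needs checking before it goes into a test database. The sketch below builds a simple request prompt and keeps only well-formed rows from a completion. The column names, prompt wording and helper names are hypothetical, and the sample completion in the usage lines is invented, not real GPT-3 output.

```python
import csv
import io

COLUMNS = ["first_name", "last_name", "city", "email"]  # hypothetical schema


def build_test_data_prompt(n_rows: int, columns=COLUMNS) -> str:
    """Ask for n_rows of CSV data under a fixed header."""
    header = ",".join(columns)
    return (f"Generate {n_rows} rows of realistic test data as CSV "
            f"with the header below.\n{header}\n")


def parse_rows(completion: str, columns=COLUMNS) -> list[dict[str, str]]:
    """Parse the model's CSV text, keeping only rows with exactly one value per column."""
    reader = csv.reader(io.StringIO(completion.strip()))
    rows = []
    for values in reader:
        if values == columns:
            continue  # the model repeated the header
        if len(values) == len(columns):
            rows.append(dict(zip(columns, (v.strip() for v in values))))
    return rows


# Invented completion, including a malformed line that should be dropped.
fake_completion = (
    "Anna,Smith,Leeds,anna.smith@example.com\n"
    "not a valid row\n"
    "Peter,Brown,York,peter.brown@example.com\n"
)
print(parse_rows(fake_completion))
```

Filtering on the column count is a cheap first guard. Real projects would probably also validate formats such as e-mail addresses or dates.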

Conclusion

We are quite impressed by how accurately GPT-3 explains code, and it is an interesting area to explore further. It may fall short on more complex code, but it is worth trying it out to see whether it proves useful in practice.

The test data generation is also very promising: it quickly produces large amounts of realistic test data, which most IT projects need.